As a doctoral student, I took a multivariate statistics class with SAS. I took a time series class that used R. I had a SEM course using LISREL. My methods guru used Stata, and my adviser SPSS (yes, there is a reason I didn’t link to SPSS). Needless to say, I had a diverse background in statistics software.

Over the years I used, and taught with, mostly Stata, while trying to keep up with R.

This year, I’m all in with R.

Why? Because point and click statistics only makes the researcher degrees of freedom problem worse. Others much more qualified than me have spoken on this, but I think the general trend towards using context-menu based software has resulted in a ‘dumbing-down’ effect in the quality of empirical analyses. It’s not because researchers are dumber, to be clear, it’s that context menu software makes running analyses without understanding what’s going on under the hood far too simple.

Case in point that I use in class a lot? Cronbach’s alpha.

Here’s the formula for Alpha…

\(\alpha=\frac{N*\bar{c}}{\bar{v}+(N-1)*\bar{c}}\)

Without going through the details, \(N\) is the number of indicators being evaluated, and \(\bar{c}\) is the average inter-item covariance. The kicker with alpha is that a large number of indicators will inflate alpha, even in the presence of a low correlation between the actual indicators themselves.

So far so good, but lets look at the output from a common point and click software package—I don’t actually have a license for it, so I’m borrowing this image from the very excellent UCLA stats page.

Notice there is nothing in the output about the average inter-item covariance or correlation. My argument is that SPSS is one reason why we have so many 20+ item psychometric scales floating around with questionable reliability.

Now lets take a look at R, with the popular psych package…

library(psych)

alpha(my.df)

##

## Reliability analysis

## Call: alpha(x = my.df)

##

## raw_alpha std.alpha G6(smc) average_r S/N ase mean sd

## 0.83 0.83 0.76 0.61 4.7 0.028 4 1.2

##

## lower alpha upper 95% confidence boundaries

## 0.77 0.83 0.88

##

## Reliability if an item is dropped:

## raw_alpha std.alpha G6(smc) average_r S/N alpha se

## RISK1 0.78 0.78 0.64 0.64 3.6 0.040

## RISK2 0.71 0.71 0.55 0.55 2.4 0.054

## RISK3 0.78 0.78 0.64 0.64 3.6 0.040

##

## Item statistics

## n raw.r std.r r.cor r.drop mean sd

## RISK1 116 0.84 0.85 0.72 0.66 4.0 1.4

## RISK2 116 0.89 0.89 0.81 0.73 3.9 1.4

## RISK3 116 0.85 0.85 0.73 0.66 4.1 1.4

##

## Non missing response frequency for each item

## 1 2 3 4 5 6 7 miss

## RISK1 0.03 0.13 0.15 0.35 0.20 0.11 0.03 0

## RISK2 0.05 0.16 0.19 0.22 0.28 0.09 0.02 0

## RISK3 0.06 0.09 0.15 0.28 0.28 0.13 0.02 0

The data source isn’t important, but beyond the terrific output we see that the average_r—the average inter-item correlation is pretty good, .61. The alpha itself is .83, well within the recommended range.

Now lets take a look at a nine item scale…

library(psych)

alpha(my.df2)

##

## Reliability analysis

## Call: alpha(x = my.df2)

##

## raw_alpha std.alpha G6(smc) average_r S/N ase mean sd

## 0.85 0.85 0.88 0.39 5.9 0.021 4.1 1.1

##

## lower alpha upper 95% confidence boundaries

## 0.81 0.85 0.89

##

## Reliability if an item is dropped:

## raw_alpha std.alpha G6(smc) average_r S/N alpha se

## INN1 0.83 0.83 0.86 0.38 4.9 0.025

## INN2 0.83 0.84 0.85 0.39 5.1 0.024

## INN3 0.84 0.85 0.86 0.41 5.5 0.022

## PRO1 0.83 0.84 0.85 0.39 5.1 0.023

## PRO2 0.84 0.84 0.86 0.40 5.4 0.022

## PRO3 0.86 0.86 0.89 0.44 6.3 0.020

## RISK1 0.83 0.83 0.86 0.38 5.0 0.024

## RISK2 0.83 0.83 0.85 0.37 4.8 0.024

## RISK3 0.83 0.83 0.86 0.38 4.9 0.024

##

## Item statistics

## n raw.r std.r r.cor r.drop mean sd

## INN1 113 0.76 0.74 0.70 0.65 3.9 1.9

## INN2 113 0.72 0.70 0.68 0.61 4.2 1.7

## INN3 113 0.64 0.62 0.58 0.52 3.8 1.7

## PRO1 113 0.70 0.70 0.67 0.60 4.1 1.6

## PRO2 113 0.65 0.64 0.59 0.53 4.3 1.7

## PRO3 113 0.46 0.48 0.36 0.33 4.5 1.4

## RISK1 113 0.71 0.72 0.68 0.62 4.0 1.4

## RISK2 113 0.75 0.77 0.75 0.68 3.8 1.4

## RISK3 113 0.73 0.75 0.72 0.65 4.1 1.4

##

## Non missing response frequency for each item

## 1 2 3 4 5 6 7 miss

## INN1 0.12 0.23 0.06 0.17 0.18 0.16 0.09 0

## INN2 0.06 0.18 0.11 0.19 0.20 0.18 0.08 0

## INN3 0.10 0.13 0.20 0.20 0.18 0.13 0.05 0

## PRO1 0.08 0.11 0.12 0.26 0.25 0.14 0.05 0

## PRO2 0.09 0.08 0.12 0.21 0.25 0.18 0.08 0

## PRO3 0.04 0.09 0.09 0.20 0.36 0.18 0.04 0

## RISK1 0.04 0.13 0.14 0.35 0.20 0.12 0.02 0

## RISK2 0.05 0.15 0.19 0.22 0.28 0.09 0.01 0

## RISK3 0.06 0.10 0.15 0.27 0.28 0.12 0.02 0

Our alpha went up to .85—even better than before! But the average_r dropped to .39. The formula is biased by the large number of indicators, not because the scale actually has higher internal reliability.

Ok, I’m not being entirely fair to SPSS, and its always the responsibility of the researcher to understand the nuts and bolts of any analysis he or she runs.

Nonetheless, what I love about R is that it facilitates—empowers—a deeper understanding of the statistical tools that most applied researchers use on a daily basis. Rather than moving more and more of the stats to the background, R brings it to the foreground, giving the analyst the information to draw better conclusions. The added bonus? The R community is so large now that if you are stuck or having trouble, a quick search is usually all you need to find the answer.

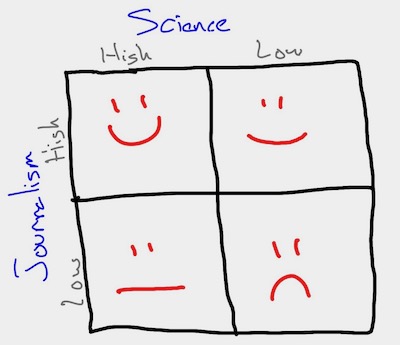

The bottom line? R is a tool for data science, and given the replication crisis, we could all use a little more science, and the tools that support it.