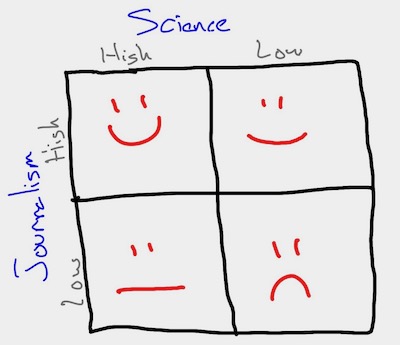

I’ve drawn a version of that graphic dozens of times now when talking to PhD students about publishing in management/entrepreneurship. The purpose of the graphic is to talk about publishing probabilities based on a given paper’s strengths—its ability to draw causal inference (good science), or its ability to tell an interesting and compelling story (good journalism).

As a field, we have a silly devotion to ‘making a theoretical contribution’ as a standard for publication. Requiring each study to bring something new to the table is the exact opposite of what we should want as scientists: trustworthy, replicable results that imbue confidence in a model’s predictions.

Now, the happiest face, and hence the highest publication probability, absolutely belongs to a paper that addresses an important topic, is well written and argued, AND has a high-quality design with supporting empirics. This should be, of course, the goal. Producing such work consistently, however, is not easy. In our publish-or-perish world, promotion and tenure standards call for a portfolio of work, at least some of which is not likely to fall in our ideal box. So the question becomes: as a field, should we favor high-quality science that may address less interesting topics or simple main effects? Or should we favor papers that speak to an interesting topic but with research designs that represent a garden of forking paths and have less trustworthy results?

To put it another way, what matters more to our field, internal validity or external validity?

Again, the ideal is that both matter, although I’m in the camp that internal validity is a necessary element for external validity. What’s the point of a generalizable finding that is wrong in the first place? But when it comes to editorial decisions, and I’ve certainly seen this in my own work, I would argue that in our field good journalism improves the odds of publication even with questionable empirics. I don’t have any data to support my hypothesis, although I typically don’t get much resistance when I draw my picture during a seminar.

Fortunately, though, I think we’re slowly changing as a field. The increasing recognition of the replication crisis in science broadly, and in associated fields like psychology and organizational behavior, will, over time I believe, change the incentive structure to favor scientific rigor over journalistic novelty. Closer to my field, the encouraging changes in the editorial policies of Strategic Management Journal may help tilt the balance in favor of rigor.

In the spirit, then, of Joe Simmons’ recent post on prioritizing replication, I’d like our field to demonstrably lower the bar for novel theoretical insights in each new published study. That bar is, to me, what is holding our field back from bridging the gap between academia and practice. Why should we expect a manager to use our work if we can’t show that our results replicate?
